If you’re still running CentOS 7 on your systems, you may have recently seen errors like this when running `yum update`:

```text
http://mirror.centos.org/centos/7/os/x86_64/repodata/repomd.xml: [Errno 14] HTTP Error 404 - Not Found
```

This error occurs because CentOS 7 reached its end of life (EOL) on June 30, 2024. As a result, the main CentOS mirrors (mirror.centos.org) no longer host packages for this release.
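To see which repo files on your system still point at the retired hosts, a small helper like the one below can be used. It is a sketch under stated assumptions: the function name `find_retired_repo_urls` is hypothetical, it only inspects active `baseurl=` and `mirrorlist=` lines in `*.repo` files, and it does not handle indented or multi-line `baseurl` entries.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list active (uncommented) repo lines that still
# reference the retired CentOS hosts mirror.centos.org or
# mirrorlist.centos.org. Defaults to the standard yum directory; pass
# another directory to inspect a copy instead.
find_retired_repo_urls() {
    local dir="${1:-/etc/yum.repos.d}"
    # -H prints the file name and -n the line number. The pattern is
    # anchored at the key name, so commented-out lines ("#baseurl=...")
    # are not reported.
    grep -Hn -E '^(baseurl|mirrorlist)=.*(mirror|mirrorlist)\.centos\.org' \
        "$dir"/*.repo
}
```

Running `find_retired_repo_urls` prints each match as `file:line:content`. If nothing is printed and the exit status is non-zero, no active entry references the retired hosts.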

CentOS maintains an archive of older repositories at vault.centos.org. To fix the YUM update errors, point your system at these archive repositories, which you can do with the `sed` command. First, back up your current `/etc/yum.repos.d` directory:

```bash
cd /etc/
tar -cvf yum-backup.tar yum.repos.d
```

Then do a search and replace with `sed`:

```bash
sed -i 's/mirror\.centos\.org/vault.centos.org/g' /etc/yum.repos.d/*.repo
```

If your server provider has modified the repo files, the command above may not fix the problem, because the repository URLs point to a local mirror run by the data center rather than to mirror.centos.org. In that case, edit the file manually and use the following configuration:

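```bash
vi /etc/yum.repos.d/CentOS-Base.repo
```

In the file, replace the existing `[base]`, `[updates]`, `[extras]`, and `[centosplus]` sections with the following content: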

```ini
[base]
name=CentOS-7 - Base
baseurl=https://vault.centos.org/7.9.2009/os/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

[updates]
name=CentOS-7 - Updates
baseurl=https://vault.centos.org/7.9.2009/updates/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

[extras]
name=CentOS-7 - Extras
baseurl=https://vault.centos.org/7.9.2009/extras/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

[centosplus]
name=CentOS-7 - Plus
baseurl=https://vault.centos.org/7.9.2009/centosplus/$basearch/
gpgcheck=1
enabled=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7
```

Clear the YUM cache and regenerate it:

```bash
yum clean all
```

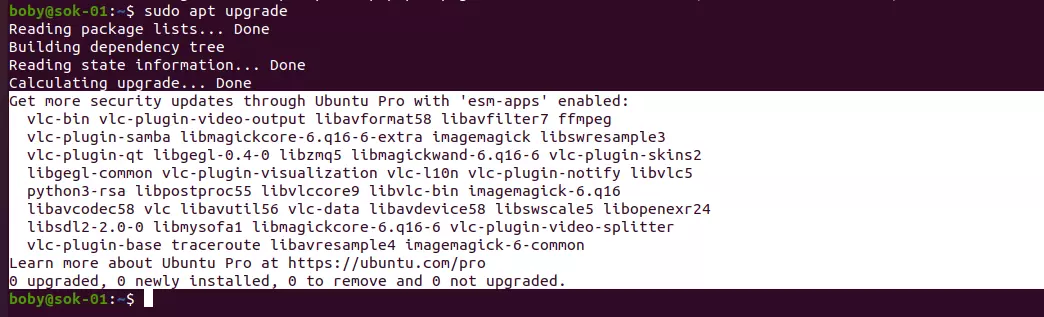

```bash
yum makecache
```

The `yum update` command should now work:

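```bash
yum update
```

Keep in mind that continuing to run CentOS 7 is insecure, because it no longer receives security updates. You should migrate to a RHEL 8-based distribution such as AlmaLinux 8 or Rocky Linux 8 to keep your server secure.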
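If `yum` still fails after the `sed` replacement with an error mentioning `mirrorlist.centos.org`, the repo files are probably still in their stock form: each section uses an active `mirrorlist=` line, and the `baseurl=` line is commented out, so replacing the hostname alone changes nothing yum actually reads. The sketch below assumes that default CentOS 7 layout and GNU `sed`; the function name `switch_repos_to_vault` is hypothetical. It performs the same hostname replacement, then comments out the `mirrorlist.centos.org` lines and re-enables the commented-out vault `baseurl=` lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point stock CentOS 7 repo files at vault.centos.org.
# Assumes each section has an active "mirrorlist=http://mirrorlist.centos.org/..."
# line and a commented-out "#baseurl=http://mirror.centos.org/..." line.
# Uses GNU sed's in-place editing (-i without a suffix).
switch_repos_to_vault() {
    local dir="${1:-/etc/yum.repos.d}"
    # The expressions run in order on every line:
    # 1. swap the retired mirror host for the vault host;
    # 2. comment out mirrorlist lines ("&" is the whole match);
    # 3. uncomment baseurl lines that now point at the vault.
    sed -i \
        -e 's/mirror\.centos\.org/vault.centos.org/g' \
        -e 's|^mirrorlist=http://mirrorlist\.centos\.org|#&|' \
        -e 's|^#baseurl=http://vault\.centos\.org|baseurl=http://vault.centos.org|' \
        "$dir"/*.repo
}
```

After taking the backup described earlier, source the function as root, run `switch_repos_to_vault`, and then repeat `yum clean all` and `yum makecache`.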
