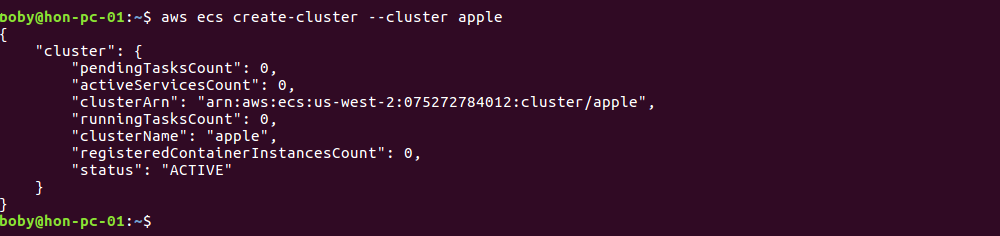

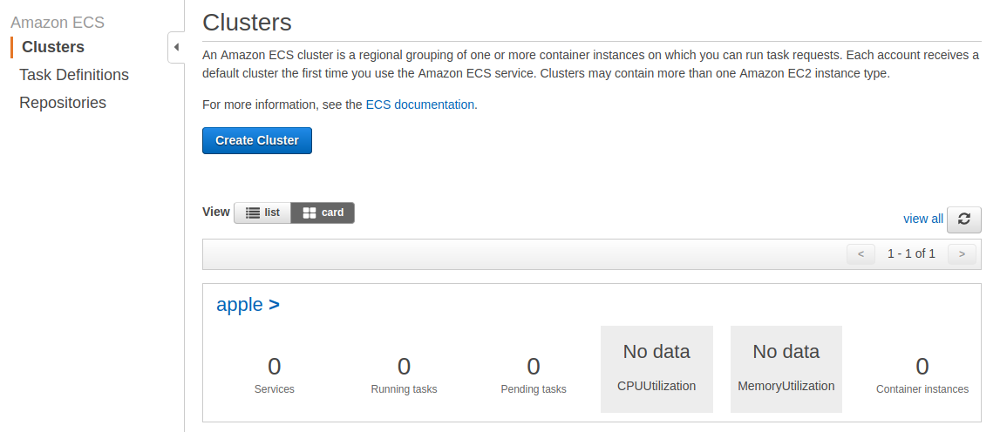

STEP 1: Creating a Cluster

The first step is to create a cluster. This can be done with the AWS CLI or the Amazon ECS console. To do it with the CLI, run:

```
aws ecs create-cluster --cluster-name CLUSTER_NAME
```

If you log in to the Amazon ECS console, you will see the newly created cluster.
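If you would rather script this step, the same API operation is exposed by the AWS SDK for Python (boto3) as `create_cluster`, and `describe_clusters` can stand in for checking the console. This is a minimal sketch that assumes boto3 is installed and AWS credentials and a region are configured. `create_and_check_cluster` is a hypothetical helper name. The client is passed in as a parameter so the function can be tried against a stub.

```python
def create_and_check_cluster(ecs_client, name):
    """Create an ECS cluster, then look it up again and return (arn, status).

    ecs_client is expected to behave like boto3.client("ecs"); it is passed in
    so the function can be tried against a stub without AWS access.
    """
    # Same API operation as: aws ecs create-cluster --cluster-name NAME
    created = ecs_client.create_cluster(clusterName=name)
    arn = created["cluster"]["clusterArn"]

    # Scripted version of checking the console: describe the cluster by name.
    described = ecs_client.describe_clusters(clusters=[name])
    clusters = described["clusters"]
    status = clusters[0]["status"] if clusters else None
    return arn, status

# Usage (assumes boto3 is installed and AWS credentials/region are configured):
#   import boto3
#   print(create_and_check_cluster(boto3.client("ecs"), "CLUSTER_NAME"))
```

A freshly created cluster should report the status `ACTIVE`.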

To delete the cluster from the command line, run:

```
aws ecs delete-cluster --cluster CLUSTER_NAME
```
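ECS refuses to delete a cluster that still has registered container instances or services. The sketch below checks for both before calling boto3's `delete_cluster`, so a script fails with a clear message instead. It assumes a boto3 ECS client (or a compatible stub). `delete_cluster_if_empty` is a hypothetical helper name, and only the first page of each listing is inspected, which is enough to tell whether it is empty.

```python
def delete_cluster_if_empty(ecs_client, name):
    """Delete an ECS cluster and return the status ECS reports for it.

    Raises RuntimeError instead of calling delete_cluster when the cluster
    still has container instances or services (ECS would reject the call).
    Only the first page of each listing is checked; that is enough to tell
    whether it is empty.
    """
    instances = ecs_client.list_container_instances(cluster=name)["containerInstanceArns"]
    services = ecs_client.list_services(cluster=name)["serviceArns"]
    if instances or services:
        raise RuntimeError(f"cluster {name!r} still has container instances or services")

    # Same API operation as: aws ecs delete-cluster --cluster NAME
    response = ecs_client.delete_cluster(cluster=name)
    return response["cluster"]["status"]  # "INACTIVE" once deleted
```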

STEP 2: Creating a Task Definition

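A task definition tells Amazon ECS which Docker images to run and how to run them: port mappings, memory, CPU, links between containers and environment variables. It is like a blueprint for a task, not an actual task. Once a task definition is registered, you create tasks based on it.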
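To make the blueprint/task split concrete, the following sketch starts one task from a registered task definition family with boto3's `run_task`. It assumes boto3 and AWS credentials are configured and that the cluster already has capacity to place the task on (for example registered EC2 container instances), which this tutorial does not cover. `run_one_task` is a hypothetical helper name.

```python
def run_one_task(ecs_client, cluster, family, revision=None):
    """Start one task from a registered task definition; return the task ARNs.

    Passing only the family ("serverok_blog") makes ECS use the latest ACTIVE
    revision; "family:revision" pins a specific version of the blueprint.
    """
    task_definition = family if revision is None else f"{family}:{revision}"
    response = ecs_client.run_task(cluster=cluster, taskDefinition=task_definition, count=1)
    if response.get("failures"):
        # For example, no container instance in the cluster has enough CPU/memory.
        raise RuntimeError(f"run_task failed: {response['failures']}")
    return [task["taskArn"] for task in response["tasks"]]
```

One task definition can back many tasks. Each `run_task` call creates new running copies, while the definition itself stays unchanged.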

Create a file named “task-def.json” with the following content:

```json
{
  "containerDefinitions": [
    {
      "name": "wordpress",
      "links": [
        "mysql"
      ],
      "image": "wordpress",
      "essential": true,
      "portMappings": [
        {
          "containerPort": 80,
          "hostPort": 80
        }
      ],
      "memory": 500,
      "cpu": 10
    },
    {
      "environment": [
        {
          "name": "MYSQL_ROOT_PASSWORD",
          "value": "password"
        }
      ],
      "name": "mysql",
      "image": "mysql",
      "cpu": 10,
      "memory": 500,
      "essential": true
    }
  ],
  "family": "serverok_blog"
}
```
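Before registering, it can help to catch simple mistakes in the file, such as a `links` entry that names a container not defined in the same task definition (here `wordpress` links to `mysql`). This is a minimal sketch of a few sanity checks using only the standard library. `check_task_definition` and `check_task_definition_file` are hypothetical helpers, and ECS still performs the full validation at registration time.

```python
import json


def check_task_definition(task_def):
    """Return a list of obvious problems in a task definition dict (empty if none).

    These are only a few sanity checks; ECS performs the full validation when
    the definition is registered.
    """
    problems = []
    if not task_def.get("family"):
        problems.append("missing 'family'")
    containers = task_def.get("containerDefinitions", [])
    if not containers:
        problems.append("no containerDefinitions")
    names = {c.get("name") for c in containers}
    for c in containers:
        label = c.get("name", "<unnamed>")
        for field in ("name", "image"):
            if not c.get(field):
                problems.append(f"{label}: missing '{field}'")
        # A link must point at another container in the same task definition.
        # Links may be written as "name" or "name:alias".
        for link in c.get("links", []):
            target = link.split(":", 1)[0]
            if target not in names:
                problems.append(f"{label}: link to unknown container '{target}'")
    return problems


def check_task_definition_file(path):
    """Load a JSON file such as task-def.json and run the checks above on it."""
    with open(path) as f:
        return check_task_definition(json.load(f))
```

For the file above, `check_task_definition_file("task-def.json")` should return an empty list.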

Now run

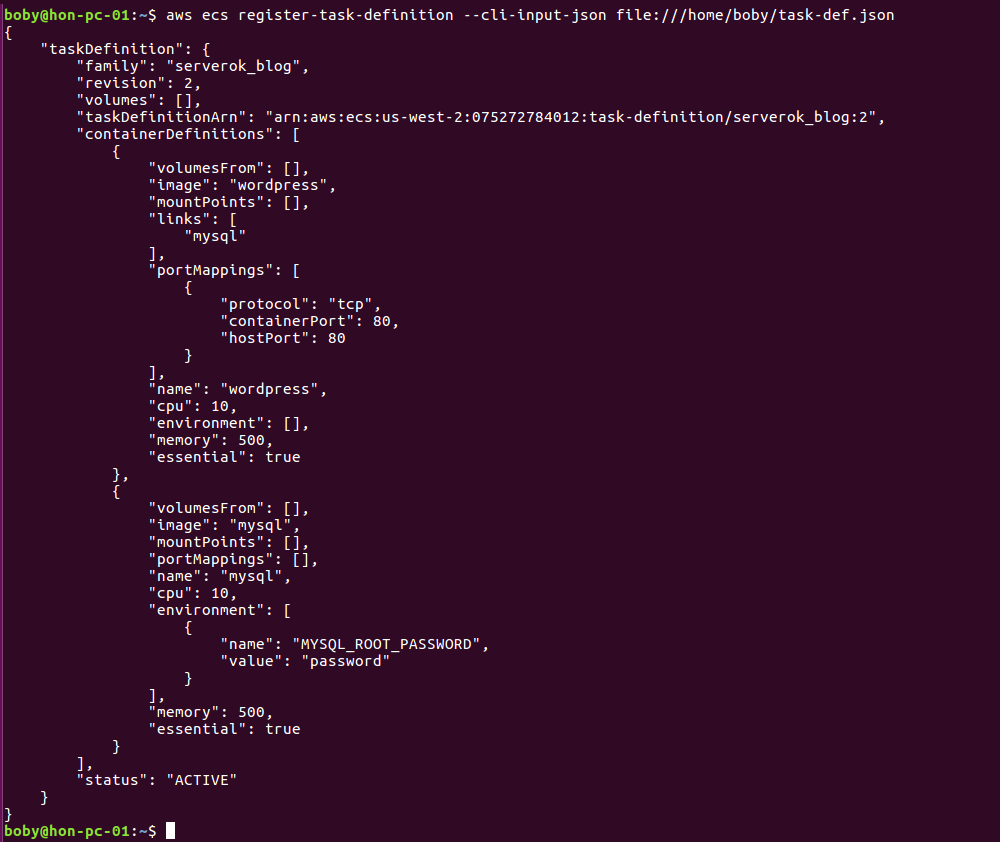

```
aws ecs register-task-definition --cli-input-json file:///path/to/task-def.json
```

You will see output similar to this:

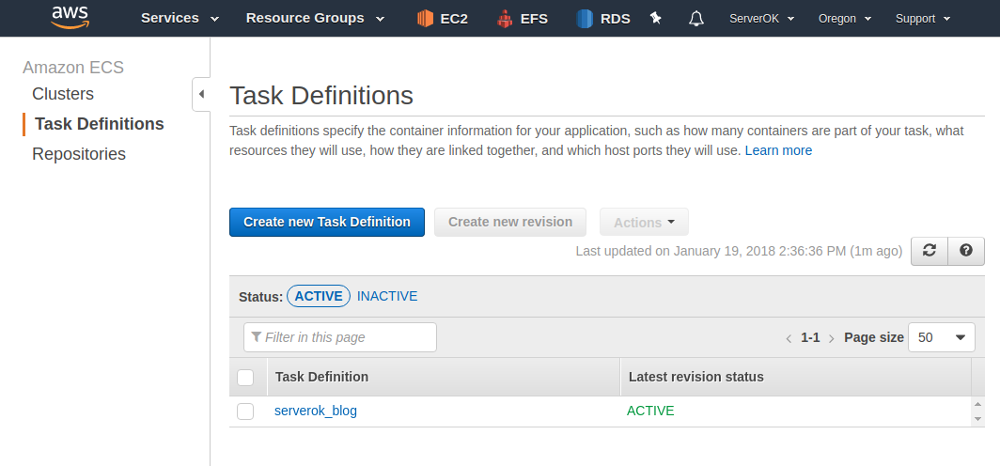

The newly created task definition also appears in the AWS Console under ECS > Task Definitions.
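The same file can be registered from Python. The top-level keys in task-def.json (`family`, `containerDefinitions`) are the RegisterTaskDefinition request parameters, which is why the CLI accepts the file via `--cli-input-json`. boto3's `register_task_definition` takes the same names as keyword arguments. This is a sketch assuming a configured boto3 ECS client. `register_from_file` is a hypothetical helper name.

```python
import json


def register_from_file(ecs_client, path):
    """Register the task definition stored in a JSON file; return "family:revision".

    ecs_client is expected to behave like boto3.client("ecs").
    """
    with open(path) as f:
        task_def = json.load(f)
    # The file's top-level keys match the RegisterTaskDefinition parameters,
    # so they can be passed straight through as keyword arguments.
    response = ecs_client.register_task_definition(**task_def)
    registered = response["taskDefinition"]
    return f"{registered['family']}:{registered['revision']}"

# Usage (assumes boto3 is installed and AWS credentials/region are configured):
#   import boto3
#   print(register_from_file(boto3.client("ecs"), "/path/to/task-def.json"))
```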

Updating a Task Definition

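To change a task definition, edit the JSON file and run exactly the same command you used to register it. Task definitions are not edited in place: each registration creates a new revision in the same family (serverok_blog:1, serverok_blog:2, and so on), and the earlier revisions stay registered until you deregister them.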
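Editing the file by hand is fine. If you change values from a script instead, a small standard-library helper can set one environment variable on one container and write the file back. This is a minimal sketch. `set_container_env` is a hypothetical helper name, and it rewrites the file with 2-space indentation.

```python
import json


def set_container_env(path, container_name, var_name, value):
    """Set (or add) one environment variable for one container in a task-def JSON file."""
    with open(path) as f:
        task_def = json.load(f)

    for container in task_def["containerDefinitions"]:
        if container["name"] != container_name:
            continue
        env = container.setdefault("environment", [])
        for entry in env:
            if entry["name"] == var_name:
                entry["value"] = value  # overwrite the existing value
                break
        else:
            env.append({"name": var_name, "value": value})  # variable was not set yet
        break
    else:
        raise KeyError(f"no container named {container_name!r} in {path}")

    with open(path, "w") as f:
        json.dump(task_def, f, indent=2)
```

After running it, register the file again to get a new revision. Environment values are stored in plain text in the registered task definition, so the new password will be visible in the command's output.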

Our task definition uses “password” as the MySQL root password. Let's change it to a stronger one, then run the command again to register the updated task definition:

```
boby@hon-pc-01:~$ aws ecs register-task-definition --cli-input-json file:///home/boby/task-def.json
{
    "taskDefinition": {
        "family": "serverok_blog",
        "containerDefinitions": [
            {
                "links": [
                    "mysql"
                ],
                "essential": true,
                "memory": 500,
                "environment": [],
                "cpu": 10,
                "name": "wordpress",
                "mountPoints": [],
                "image": "wordpress",
                "volumesFrom": [],
                "portMappings": [
                    {
                        "containerPort": 80,
                        "protocol": "tcp",
                        "hostPort": 80
                    }
                ]
            },
            {
                "essential": true,
                "memory": 500,
                "environment": [
                    {
                        "name": "MYSQL_ROOT_PASSWORD",
                        "value": "superman123"
                    }
                ],
                "cpu": 10,
                "name": "mysql",
                "mountPoints": [],
                "image": "mysql",
                "volumesFrom": [],
                "portMappings": []
            }
        ],
        "taskDefinitionArn": "arn:aws:ecs:us-west-2:075272784012:task-definition/serverok_blog:3",
        "volumes": [],
        "revision": 3,
        "status": "ACTIVE"
    }
}
boby@hon-pc-01:~$
```
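The output shows revision 3 in the serverok_blog family, and the ARN ends with the same `family:revision` pair. If you capture the command's JSON output (for example by redirecting it to a file), a short standard-library function can pull these values out and check that they agree. This is a sketch. `parse_registration` is a hypothetical helper name.

```python
import json


def parse_registration(output_text):
    """Return (family, revision, arn) from register-task-definition JSON output."""
    task_def = json.loads(output_text)["taskDefinition"]
    arn = task_def["taskDefinitionArn"]
    # The ARN ends with "task-definition/<family>:<revision>", e.g. ".../serverok_blog:3".
    family, revision = arn.rsplit("/", 1)[1].rsplit(":", 1)
    if family != task_def["family"] or int(revision) != task_def["revision"]:
        raise ValueError(f"ARN {arn} does not match the family/revision fields")
    return family, task_def["revision"], arn
```

For a one-off check, the AWS CLI's global `--query` option can print just the revision, e.g. by adding `--query taskDefinition.revision --output text` to the register command.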